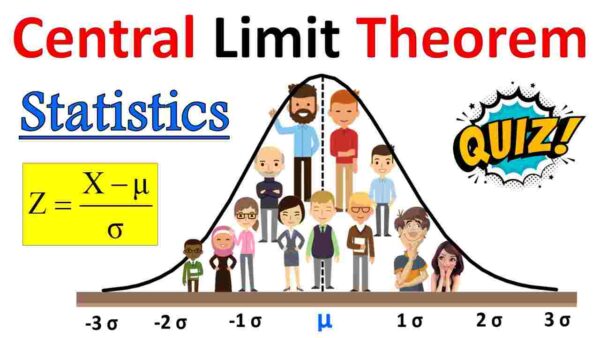

The Central Limit Theorem (CLT) is a fundamental concept in statistics. It states that if you draw independent random samples of size n from a population with mean μ and finite standard deviation σ, the sampling distribution of the sample mean approaches a normal distribution with mean μ and standard deviation σ/√n as n increases, whatever the shape of the population distribution. In other words, when you take repeated random samples from a population and calculate the mean of each sample, the distribution of those sample means will be approximately normal once the samples are large enough, even if the original population is skewed or otherwise non-normal.
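A quick way to see this is to simulate it. The Python sketch below is our own illustration, not taken from the videos: `simulate_sample_means` and `skewness` are hypothetical helper names, and the Exponential population (mean 1, standard deviation 1, strongly right-skewed), the seed, and the sample sizes are arbitrary choices. For each sample size it reports the average and spread of the sample means, the predicted spread σ/√n, and the skewness, which is about 0 for a normal distribution.

```python
import math
import random
import statistics


def simulate_sample_means(n, num_samples=5000, seed=0):
    """Draw num_samples independent samples of size n from an Exponential
    population with rate 1 (mean 1, standard deviation 1) and return the
    mean of each sample."""
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.expovariate(1.0) for _ in range(n))
        for _ in range(num_samples)
    ]


def skewness(values):
    """Population-style skewness: about 0 for a symmetric distribution
    such as the normal, about 2 for the Exponential itself."""
    m = statistics.fmean(values)
    s = statistics.pstdev(values, m)
    return statistics.fmean(((v - m) / s) ** 3 for v in values)


for n in (1, 5, 30, 100):
    means = simulate_sample_means(n)
    print(
        f"n={n:>3}  mean of means={statistics.fmean(means):.3f}  "
        f"sd of means={statistics.stdev(means):.3f}  "
        f"sigma/sqrt(n)={1 / math.sqrt(n):.3f}  "
        f"skewness={skewness(means):.2f}"
    )
```

The mean of the sample means should stay near 1 for every n, their standard deviation should track σ/√n, and the skewness should fall toward 0 (for means of Exponential draws it shrinks roughly like 2/√n), which is the CLT at work.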

Here are a few examples to illustrate the Central Limit Theorem:

- Coin Flips: Suppose you have a fair coin and want to examine the proportion of heads in a sample of coin flips. If you flip the coin once, the result is either 0 or 1 head, each with probability 0.5 (a Bernoulli distribution), which looks nothing like a bell curve. However, as you increase the number of flips in each sample, the distribution of the sample proportion (number of heads divided by the sample size) becomes approximately normal. Its mean equals the population value of 0.5 for every sample size, its standard deviation shrinks as 0.5/√n, and the CLT tells us that its shape approaches a bell curve.

- Heights of Individuals: Consider the heights of individuals in a population. The population distribution of heights may not be normal; for example, a population that mixes children and adults can have a lopsided or multi-peaked distribution. However, if you take random samples of individuals and calculate the mean height in each sample, the distribution of those sample means will tend toward a normal distribution. As the sample size increases, the sample means cluster more tightly around the population mean height, with a spread (standard deviation) proportional to 1/√n.

- Exam Scores: Suppose you want to investigate the distribution of exam scores in a large class. The individual scores may not follow a normal distribution; for example, they may be skewed, or bunched up near the maximum score if the exam was easy. However, if you take random samples of scores and calculate the average score in each sample, the distribution of those sample means will approximate a normal distribution. This holds as long as the sample sizes are reasonably large and the scores are independent of each other.
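The coin-flip example is easy to check by simulation. The following Python sketch is our own illustration (`sample_proportions`, the seed, and the sample sizes are arbitrary, hypothetical choices): it repeats many experiments of n flips each, compares the spread of the sample proportions with the theoretical value 0.5/√n, and draws a crude text histogram for n = 100.

```python
import math
import random
import statistics
from collections import Counter


def sample_proportions(n, num_samples=5000, p=0.5, seed=1):
    """Return the proportion of heads in each of num_samples experiments,
    where each experiment flips a coin with P(heads) = p exactly n times."""
    rng = random.Random(seed)
    return [
        sum(rng.random() < p for _ in range(n)) / n
        for _ in range(num_samples)
    ]


for n in (1, 10, 100):
    props = sample_proportions(n)
    # Theory: the mean is p = 0.5 for every n, and the standard deviation
    # is sqrt(p * (1 - p) / n) = 0.5 / sqrt(n).
    print(
        f"n={n:>3}  mean={statistics.fmean(props):.3f}  "
        f"sd={statistics.stdev(props):.3f}  theory sd={0.5 / math.sqrt(n):.3f}"
    )

# Crude text histogram for n = 100, grouping head counts into bins of 5.
# Each '#' stands for 50 experiments; the bars should form a bell shape.
heads = Counter(round(x * 100) // 5 * 5 for x in sample_proportions(100))
for start in sorted(heads):
    print(f"{start:3d}-{start + 4:3d} heads | {'#' * (heads[start] // 50)}")
```

With n = 1 the proportions are only ever 0 or 1; with n = 100 the histogram should peak around 50 heads and fall off symmetrically on both sides.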

In all these examples, the Central Limit Theorem allows us to make inferences about the population based on the distribution of sample means. It is a powerful tool for statistical analysis and hypothesis testing because it lets us use the well-known properties of the normal distribution even when the population distribution is not normal.
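As a concrete instance of hypothesis testing, the sketch below checks whether a coin is fair using the normal approximation that the CLT justifies. The counts (60 heads in 100 flips) are hypothetical, and `proportion_z_test` is an illustrative helper, not a library function; `statistics.NormalDist` is part of Python's standard library (3.8+).

```python
import math
from statistics import NormalDist


def proportion_z_test(heads, n, p0=0.5):
    """Two-sided z-test of H0: P(heads) = p0, using the CLT's normal
    approximation to the sampling distribution of the sample proportion."""
    p_hat = heads / n
    standard_error = math.sqrt(p0 * (1 - p0) / n)  # SD of p_hat if H0 is true
    z = (p_hat - p0) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: 60 heads observed in 100 flips.
z, p_value = proportion_z_test(60, 100)
print(f"z = {z:.2f}, two-sided p-value = {p_value:.4f}")
# prints: z = 2.00, two-sided p-value = 0.0455
```

The normal approximation is usually considered adequate when n·p0 and n·(1 − p0) are both reasonably large (a common rule of thumb is at least 10 each); for small samples an exact binomial test is the safer choice.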

🎬 𝐂𝐞𝐧𝐭𝐫𝐚𝐥 𝐋𝐢𝐦𝐢𝐭 𝐓𝐡𝐞𝐨𝐫𝐞𝐦 𝐒𝐭𝐚𝐭𝐢𝐬𝐭𝐢𝐜𝐬 : https://youtu.be/a7szVlUy9dU

🎬 𝐋𝐚𝐰 𝐨𝐟 𝐋𝐚𝐫𝐠𝐞 𝐍𝐮𝐦𝐛𝐞𝐫𝐬 : https://youtu.be/RKNgLs4m6WQ

The Central Limit Theorem (CLT) and the Law of Large Numbers (LLN) are both fundamental concepts in statistics, but they address different aspects of sampling and probability theory.

The Law of Large Numbers states that as the sample size increases, the sample mean converges to the population mean (provided the population mean exists). In other words, as you take larger and larger samples from a population, the average of each sample tends to get closer to the true population mean, although not necessarily at every single step. The same idea applies to other averages; for example, the sample proportion converges to the population proportion. The Law of Large Numbers is concerned with the behavior of sample statistics as the sample size grows, and it provides a foundation for estimation.
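The Law of Large Numbers can be seen by tracking a single running average. This sketch is an illustration with an arbitrary seed, and `running_means` is a hypothetical helper name: it rolls a fair six-sided die, whose population mean is (1 + 2 + 3 + 4 + 5 + 6) / 6 = 3.5, and prints the running average at a few checkpoints.

```python
import random


def running_means(num_rolls, seed=42):
    """Roll a fair six-sided die num_rolls times and return the running
    average after each roll."""
    rng = random.Random(seed)
    total = 0
    means = []
    for i in range(1, num_rolls + 1):
        total += rng.randint(1, 6)  # randint includes both endpoints
        means.append(total / i)
    return means


means = running_means(100_000)
for k in (10, 100, 1_000, 10_000, 100_000):
    print(f"after {k:>6} rolls: average = {means[k - 1]:.4f} (population mean 3.5)")
```

The printed averages typically wander at first and settle near 3.5 as the number of rolls grows; the LLN guarantees convergence in the long run, not that every additional roll moves the average closer.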

On the other hand, the Central Limit Theorem is concerned with the behavior of the sampling distribution of the mean. It states that as the sample size increases, the sampling distribution of the mean approaches a normal distribution, regardless of the shape of the population distribution, provided the population has a finite variance. The CLT is about the shape of the distribution of sample means (and how their spread shrinks in proportion to 1/√n), not the convergence of the sample mean to the population mean. It allows us to make inferences about population parameters based on the distribution of sample means, assuming certain conditions are met: the observations are independent, they come from a population with finite variance, and the sample size is large enough for the normal approximation to be good.

To summarize the relationship between the CLT and the LLN:

- Law of Large Numbers: It focuses on the convergence of sample statistics (such as the sample mean) to the population parameter (such as the population mean) as the sample size increases.

- Central Limit Theorem: It focuses on the shape of the sampling distribution of the mean, stating that it becomes approximately normal as the sample size increases, regardless of the shape of the population distribution.
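Side by side, the two results can be written formally. The block below is the standard textbook statement for independent, identically distributed observations X₁, X₂, …, Xₙ with mean μ and (for the CLT) finite variance σ²; the notation is ours.

```latex
% Sample mean of n i.i.d. observations X_1, ..., X_n
\bar{X}_n = \frac{1}{n} \sum_{i=1}^{n} X_i

% Law of Large Numbers (weak form): the sample mean converges in probability to \mu
\bar{X}_n \xrightarrow{\;P\;} \mu \qquad \text{as } n \to \infty

% Central Limit Theorem: the standardized sample mean converges in distribution to N(0, 1)
\frac{\bar{X}_n - \mu}{\sigma / \sqrt{n}} \xrightarrow{\;d\;} \mathcal{N}(0, 1) \qquad \text{as } n \to \infty
```

The LLN is a statement about the sample mean itself, while the CLT magnifies its error (sample mean minus μ) by √n/σ and describes the shape of what remains, which is why the two theorems complement rather than repeat each other.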

In practice, the CLT and LLN are often used together. The LLN provides the theoretical basis for the sample mean converging to the population mean, while the CLT allows us to make probabilistic statements about the distribution of sample means and construct confidence intervals or perform hypothesis testing.
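Here is how the two theorems combine in practice to build a confidence interval for a population mean. This is a minimal sketch assuming independent observations and a reasonably large sample: `mean_confidence_interval` and `coverage` are hypothetical helper names, and the Exponential population, n = 50, and the seed are arbitrary choices for illustration. The interval uses the sample standard deviation s in place of the unknown σ.

```python
import math
import random
import statistics


def mean_confidence_interval(sample, level=0.95):
    """Large-sample (CLT-based) confidence interval for the population mean:
    sample mean +/- z * s / sqrt(n)."""
    n = len(sample)
    z = statistics.NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    centre = statistics.fmean(sample)
    half_width = z * statistics.stdev(sample) / math.sqrt(n)
    return centre - half_width, centre + half_width


def coverage(true_mean=1.0, n=50, trials=2000, seed=7):
    """Fraction of simulated intervals that contain the true mean, using a
    skewed Exponential population whose mean is true_mean."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.expovariate(1 / true_mean) for _ in range(n)]
        low, high = mean_confidence_interval(sample)
        hits += low <= true_mean <= high  # True counts as 1
    return hits / trials


print(f"empirical coverage of nominal 95% intervals: {coverage():.3f}")
```

With a skewed population and n = 50, the empirical coverage typically lands slightly below the nominal 95%, a reminder that the CLT gives an approximation whose quality depends on the sample size and the shape of the population. For small samples, a t-based interval is the usual choice.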

I hope this blog helped you understand these basic concepts in a simplified manner. Watch out for more such content in the future.


Thanks!!!

If you have questions, please leave them in the comment box below, and I'll do my best to get back to you in a timely fashion. And remember to subscribe to the Digital eLearning YouTube channel to have our latest videos sent to you while you sleep.

✍️ 𝓓𝓲𝓼𝓬𝓵𝓪𝓲𝓶𝓮𝓻: Copyright Disclaimer under section 107 of the Copyright Act of 1976, allowance is made for “fair use” for purposes such as criticism, comment, news reporting, teaching, scholarship, education and research. Fair use is a use permitted by copyright statute that might otherwise be infringing. The information contained in this video is just for educational and informational purposes only and does not have any intention to mislead or violate Google and YouTube community guidelines or policy. I respect and follow all terms & conditions of Google & YouTube.